From SEO to GEO - How to stay visible in the era of AI search

AI-powered search engines like ChatGPT, Claude, Gemini, and Copilot are reshaping how people find information online. Is traditional SEO still relevant?

Jan Lemmens

Solution Manager CXM

Content Science

The way users interact with online content is transforming at an increasingly rapid pace. Major players like Google, OpenAI, and Microsoft are pushing towards new information retrieval paradigms, introducing AI-driven features that reshape user expectations and behavior. Chatbots and RAG-based assistants were just the beginning of this shift. On the horizon is agentic AI, which doesn't just search or summarize content; it interprets it, acts on it, and even makes decisions on behalf of users. This evolution challenges established content strategies and requires new approaches to manage and publish content.

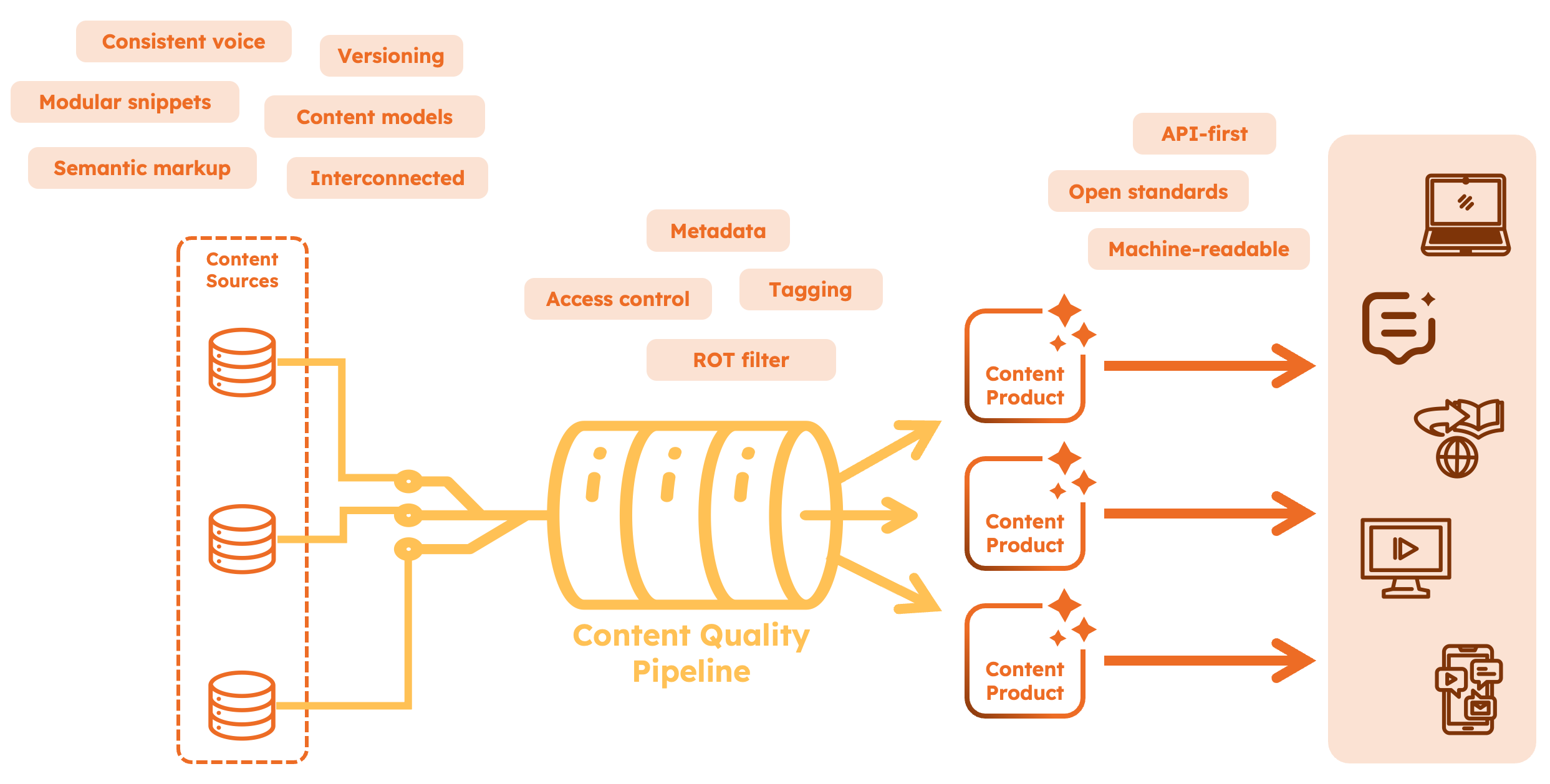

Last month, in our post The hidden bottleneck in your AI initiative: Content Chaos, we discussed several steps organizations can take to prepare for AI use cases by improving content governance. Key elements included initiating a Content Program and producing high-quality Content Products. In this post, however, we go deeper into the quality criteria for AI-optimized content itself, typically implemented as steps in a Content Quality Pipeline. We break down what “AI-ready” content means, why traditional CMS setups struggle, and how to adapt your organization with structured content, metadata, and quality monitoring.

Google’s AI Overviews and AI Mode are real-world examples of the aforementioned shift, changing the way information is presented and consumed, leading to a decline in traditional click-through rates to websites. AI Overviews already sends much less organic traffic to content sources, and AI Mode will likely supercharge that trend.

Unlike AI Overviews, AI Mode replaces traditional search results altogether by answering your question directly through a conversational UI, effectively creating a miniature multi-modal article in real time, customized for your context. The effect? People aren’t just browsing links anymore. They’re asking questions and expecting concise, accurate answers right in their preferred interface. This isn’t a future trend. It’s happening now: AI Mode was rolled out in the US in May 2025. A recent survey from Bain concludes that about 80% of consumers now rely on “zero-click” results in at least 40% of their searches, reducing organic web traffic by an estimated 15% to 25%.

AI Mode UI impression, courtesy of Google Inc.

Users are expected to increasingly find answers without leaving their AI assistant of choice, be it Google, OpenAI or another platform yet to emerge. This means organizations will lose control over the way their information and services are delivered to their customers.

It also means we will see a transition from traditional web analytics to metrics that give insight into whether and how your organization is surfaced in AI answers. Launched this week, Adobe LLM Optimizer is an example of such a tool, providing real-time insights into agentic traffic.

As a long-term consequence, we expect a transition to a “Machine Web”, in which content will be consumed mainly by AI bots instead of humans. As users increasingly rely on AI-generated summaries for quick answers, businesses must adapt their content strategies to remain visible and relevant. Eventually, publishers will likely be able to feed their content directly to AI models to facilitate this. The architecture of the Web as we know it today will therefore shift dramatically.

Assistants provided by major platform players will be the primary interaction surface, connecting to your organizational data and tools in the background. We already see open standards emerging for this new era, with Model Context Protocol (MCP) at the forefront. MCP was developed by Anthropic as a way for Large Language Models (LLMs) to talk to data sources and tools, with the goal of enabling interoperability in agentic workflows. Meanwhile, Google and OpenAI have announced MCP support. Microsoft has announced MCP support as well, together with NLWeb, an experimental framework that helps you add a conversational interface to your websites and at the same time enables MCP support, so AI agents can connect directly to your web content.

So which steps can your organization take to prepare for this new world? There are important analogies with Search Engine Optimization (SEO). For decades, SEO was all about ranking and reaching "position zero" in search results. With these new AI-based systems, it’s about being part of AI answers. And increasingly, that answer doesn’t even link back to your site. If your product or service isn’t represented correctly in structured, AI-readable content, it simply doesn’t exist in that funnel.

So what are the criteria for AI-ready content? In essence, it is content that machines can understand, retrieve accurately, and present with confidence.

These qualities overlap with SEO best practices, but with a twist: you’re now optimizing for machine interpretation, not just ranking.

Indeed, as Google describes in its documentation, there are currently no additional requirements to appear in AI Overviews or AI Mode, and no other special optimizations are necessary. Following SEO best practices will take you a long way.

In the short term, this is logical, since these new AI capabilities need to work with the content formats already in use today. In the long term, however, we expect additional optimizations to become possible, such as directly exposing your content to AI systems in machine-optimized formats (e.g. Markdown).

For your content to truly shine in the long term, it needs more than just a clear headline and good SEO. It has to be organized in a way that machines can understand and use without guessing. If your content is locked in PDFs or scattered across long-form pages, AI tools may extract irrelevant text, miss context, or produce incorrect summaries.

Most content on the Web today is still created and published through traditional content management systems, which focus on WYSIWYG page composition. While suitable for human browsing, this structure isn't optimized for the way AI systems retrieve data.

AI tools that use methods like Retrieval Augmented Generation (RAG) rely on breaking content into chunks as part of their internal architecture. However, if content is locked in page-based formats, that chunking process is often crude, splitting text by token length or paragraph without understanding meaning.

As a result, AI systems can only make a best-effort attempt at interpreting your content, which often leads to inaccurate or vague answers.
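
To make the difference concrete, here is a minimal Python sketch that contrasts fixed-size chunking with splitting along the content's own section boundaries. The function names, the sample text, and the Markdown-style `## ` heading convention are assumptions for illustration only; real RAG pipelines usually count tokens rather than characters and use more elaborate splitters.

```python
def chunk_by_length(text: str, size: int) -> list[str]:
    """Naive chunking: cut every `size` characters, ignoring meaning."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def chunk_by_heading(text: str) -> list[dict[str, str]]:
    """Structure-aware chunking: one chunk per '## ' section."""
    chunks: list[dict[str, str]] = []
    title: str = ""
    lines: list[str] = []
    for line in text.splitlines():
        if line.startswith("## "):
            # A new section starts: store the previous one, if any.
            if title or lines:
                chunks.append({"title": title, "body": "\n".join(lines).strip()})
            title, lines = line[3:].strip(), []
        else:
            lines.append(line)
    if title or lines:
        chunks.append({"title": title, "body": "\n".join(lines).strip()})
    return chunks


# Hypothetical sample content, not taken from a real product.
SAMPLE = """## Compatibility
Works with version 2 and later.
## Support steps
Restart the service, then check the logs."""

naive = chunk_by_length(SAMPLE, 40)      # cuts can land mid-sentence
structured = chunk_by_heading(SAMPLE)    # each chunk keeps its own title
```

The naive chunks lose the link between a heading and its text as soon as a cut falls in the wrong place, while the structured chunks carry their title along, which gives a retriever more context to work with.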

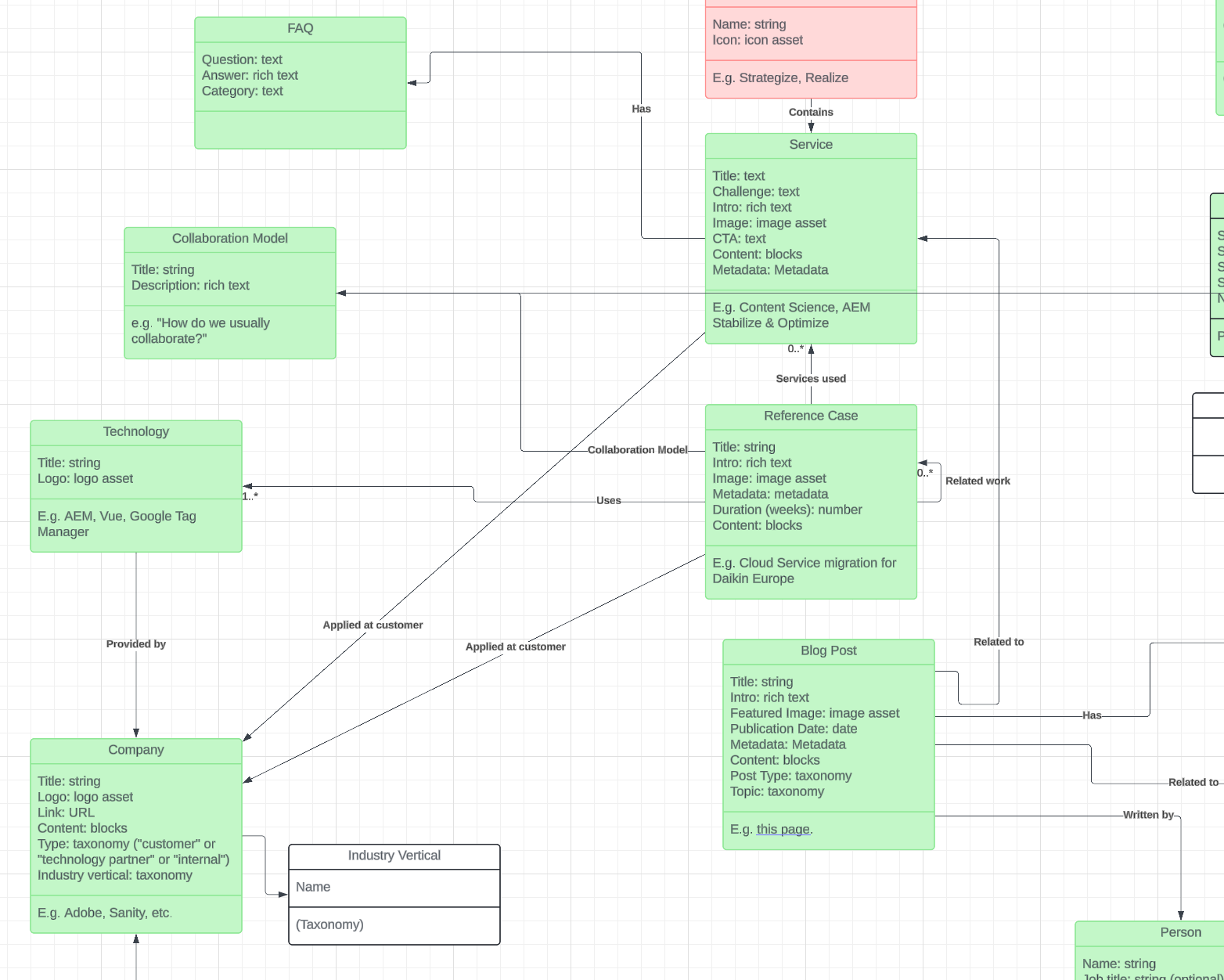

Unlike page-based systems, headless CMSes use content models as their primary way of representing content: instead of big blobs of content, authors work with a structured repository of content types and interconnected entities, each having clearly defined fields like product name, description, features, compatibility, support steps and FAQs (see example in the diagram below). This structure makes it easier for AI systems to pull exactly what they need, when they need it, whether for use in a chatbot, an AI summary or a voice assistant.

Early content model for AmeXio Fuse (as an example)

It also means your content becomes reusable across channels: the same product info can show up in a support flow, a help article, or an AI assistant, all without duplication.
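
As a rough illustration of such a content model, the sketch below defines a product type with the fields mentioned above and renders the same entry for two different channels. The class names, field types, and rendering functions are assumptions made for this example; they do not represent the schema of any particular CMS.

```python
from dataclasses import dataclass, field


@dataclass
class FAQ:
    question: str
    answer: str


@dataclass
class Product:
    """Hypothetical content type with clearly defined fields."""
    name: str
    description: str
    features: list[str] = field(default_factory=list)
    compatibility: list[str] = field(default_factory=list)
    support_steps: list[str] = field(default_factory=list)
    faqs: list[FAQ] = field(default_factory=list)


def to_markdown(product: Product) -> str:
    """Render the product as Markdown, e.g. for a help article."""
    lines = [f"# {product.name}", "", product.description]
    if product.features:
        lines += ["", "## Features"] + [f"- {feat}" for feat in product.features]
    if product.faqs:
        lines += ["", "## FAQ"]
        for faq in product.faqs:
            lines += [f"**{faq.question}**", faq.answer]
    return "\n".join(lines)


def to_support_steps(product: Product) -> str:
    """Render only the support steps, e.g. for a chatbot support flow."""
    return "\n".join(
        f"{i}. {step}" for i, step in enumerate(product.support_steps, start=1)
    )


# Placeholder data for illustration only.
example = Product(
    name="Example Product",
    description="A placeholder description.",
    features=["Feature A", "Feature B"],
    support_steps=["Restart the service", "Check the logs"],
)
article = to_markdown(example)
support_flow = to_support_steps(example)
```

Both outputs come from the same stored entry, so a correction to a field shows up in every channel without copying text around.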

Moreover, headless CMSes typically come with powerful APIs (REST or GraphQL), enabling external consumers to efficiently retrieve content.

Machines need additional information (or metadata) to accurately infer the meaning behind content. Consistent tagging helps AI systems to figure out what a piece of content is about, who it’s for, and where it fits. Use consistent vocabularies for concepts like product type, target audience, and support category.
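
One simple way to enforce consistent vocabularies is to validate tags against a controlled list before content is published. The following sketch assumes three tag dimensions and a handful of allowed values, all of which are invented for illustration; in practice these would come from your own taxonomy.

```python
# Hypothetical controlled vocabulary; replace with your own taxonomy.
VOCABULARY: dict[str, set[str]] = {
    "product_type": {"software", "hardware", "service"},
    "target_audience": {"developer", "administrator", "end-user"},
    "support_category": {"installation", "billing", "troubleshooting"},
}


def validate_tags(tags: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the tags are valid."""
    problems: list[str] = []
    for dimension, allowed in VOCABULARY.items():
        value = tags.get(dimension)
        if value is None:
            problems.append(f"missing tag: {dimension}")
        elif value not in allowed:
            problems.append(f"unknown {dimension}: {value!r}")
    return problems
```

A check like this catches near-duplicates such as "Software" versus "software" early, before they fragment the metadata that AI systems rely on.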

Using open formats like Schema.org, JSON-LD, or RSS can help AI tools interpret your content more accurately. These standards are already being used by Microsoft (NLWeb) and others to help AI navigate and retrieve web content more intelligently.
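
As an example of what this can look like, the sketch below builds Schema.org markup as JSON-LD for a product and for a list of FAQs, and wraps it in the script tag that is commonly embedded in an HTML page. The helper names and sample values are assumptions for illustration; which Schema.org types and properties fit your content is something to verify against the Schema.org vocabulary itself.

```python
import json


def product_jsonld(name: str, description: str) -> dict:
    """Describe a product using the Schema.org Product type."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
    }


def faq_jsonld(faqs: list[tuple[str, str]]) -> dict:
    """Describe question/answer pairs using the Schema.org FAQPage type."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


def as_script_tag(data: dict) -> str:
    """Wrap JSON-LD in a script tag for embedding in an HTML page."""
    # Escape '<' so that content can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
tag = as_script_tag(product_jsonld("Example Product", "A placeholder description."))
```

When the content already lives in a structured content model, markup like this can be generated from the stored fields instead of being maintained by hand.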

In addition, outdated or conflicting information is a top reason AI assistants return wrong answers. Adding version info and “last updated” dates can help.

It’s just as important to mark deprecated content, set review cycles, and keep a clear trace of what’s archived versus active. Even better, implement these processes in your CMS and automate them, so when a product update happens, content owners are notified automatically as part of their workflow.
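
A review cycle like this can be automated with very little logic. The sketch below assumes each content item stores a version, a last-updated date, a review interval in days, and a deprecation flag; these field names are hypothetical and would map onto whatever your CMS actually provides.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ContentItem:
    """Hypothetical content record with freshness metadata."""
    title: str
    version: str
    last_updated: date
    review_cycle_days: int
    deprecated: bool = False


def needs_review(item: ContentItem, today: date) -> bool:
    """An active item needs review once its review cycle has elapsed."""
    if item.deprecated:
        return False  # deprecated content is archived, not reviewed
    return today - item.last_updated > timedelta(days=item.review_cycle_days)


def items_to_review(items: list[ContentItem], today: date) -> list[ContentItem]:
    """Select the items whose owners should be notified."""
    return [item for item in items if needs_review(item, today)]
```

A scheduled job could run `items_to_review` daily and hand the result to whatever notification mechanism your workflow uses.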

Bonus tip: use AI tools to optimize content for AI systems. For example, consider deploying an AI review agent that continuously monitors your content quality and notifies the responsible authors when things like tone of voice or writing style deviate from the guidelines described in your design system.

The trend is clear: AI assistants and agents are quickly becoming the primary digital interface. To remain visible and relevant, your content must be structured, current, and machine-readable. If your information is hard to find or interpret, you’ll be left out of the conversation, literally.

The good news? You can start today. Remember: it is not necessary to overhaul your entire content library overnight. Keep focusing on existing SEO best practices, and shift investment from page-composition and layout tooling towards a content infrastructure built on content models, enriched with metadata, and exposed through APIs in machine-optimized formats. In addition, smarter review and monitoring processes help automate quality optimization at scale.

The next time someone says “SEO,” think broader: you’re now optimizing for machines, not just search engines.