The hidden cost driver in your AEM Cloud license: AI crawler traffic

AEM

Optimize

Barry d'Hoine

CXM Practice Lead

In recent months, many organizations running their digital experiences on AEM as a Cloud Service (AEMaaCS) have been noticing something unexpected in their license dashboards: a sharp increase in content requests that doesn't correlate with actual visitor growth. The culprit? A massive surge in AI crawler traffic that's silently eating into license budgets while leaving marketers caught between two competing priorities: remaining visible in the age of AI-powered search, and controlling infrastructure costs.

The AI traffic explosion

The numbers are staggering. According to Cloudflare's 2025 data, AI crawler traffic has grown by 18% year-over-year, with some individual crawlers showing growth rates exceeding 300%. Meta's AI crawlers alone now generate 52% of all AI crawler traffic, more than double the combined traffic from Google and OpenAI.

Fastly's Q2 2025 Threat Insights Report reveals that AI bots now account for nearly 80% of all automated traffic observed across its network.

For AEM Cloud Service customers, the real impact of this traffic is on licensing costs. Unlike traditional on-premises AEM, where infrastructure costs are more or less fixed, AEMaaCS uses content requests as a primary pricing metric. According to Adobe's documentation, a content request is counted for any HTML or JSON request sent to AEM's Edge servers or your custom CDN that delivers full or partial content with an HTTP 200 OK response. The calculation is straightforward: each page view counts as one content request, every five API calls count as one content request, and only production environment traffic is tracked.
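To make the counting rule concrete, here is a minimal Python sketch that applies it to a handful of request records. It assumes the simplified rule as summarized above (HTML responses count as page views, JSON responses as API calls, and only HTTP 200 responses on production count). The Request record and its fields are hypothetical, and Adobe's actual metering also applies exclusions, such as known bots, that this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical, simplified log record used only for this illustration."""
    environment: str   # e.g. "prod" or "stage"
    status: int        # HTTP status code returned
    content_type: str  # e.g. "text/html; charset=utf-8"


def estimate_content_requests(requests: list[Request]) -> float:
    """Estimate content requests: 1 per HTML page view, 1 per 5 JSON API calls.

    Only production traffic answered with HTTP 200 is counted; bot
    exclusions and other metering details are deliberately not modeled.
    """
    page_views = 0
    api_calls = 0
    for req in requests:
        if req.environment != "prod" or req.status != 200:
            continue  # non-production or non-200 responses are not counted
        media_type = req.content_type.split(";")[0].strip().lower()
        if media_type == "text/html":
            page_views += 1
        elif media_type == "application/json":
            api_calls += 1
    return page_views + api_calls / 5


sample = (
    [Request("prod", 200, "text/html; charset=utf-8")] * 3
    + [Request("prod", 200, "application/json")] * 10
    + [Request("prod", 404, "text/html")]   # not a 200: not counted
    + [Request("stage", 200, "text/html")]  # not production: not counted
    + [Request("prod", 200, "image/png")]   # not HTML/JSON: not counted
)
print(estimate_content_requests(sample))  # 3 page views + 10/5 API calls -> 5.0
```

The same arithmetic shows why crawler traffic matters: every 1,000 crawler page fetches that return 200 add 1,000 content requests, while 1,000 JSON API hits add only 200.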

For enterprise AEM implementations with annual license fees ranging from $250,000 to over $1 million, the financial implications of unchecked AI traffic can be substantial.

The visibility paradox

Here's where things get complicated. We're simultaneously witnessing a fundamental shift in how consumers discover brands online. Adobe observed a 1,100% year-over-year increase in AI traffic to U.S. retail sites in September 2025. Visitors arriving from generative AI sources were also 12% more engaged and 5% more likely to convert than visitors from traditional sources such as paid and organic search.

As we discussed in our recent post on AI-ready content, the trend is clear: AI assistants and agents are quickly becoming the primary digital interface. Gartner projects that by 2028, brands could see a 50% or greater decline in organic traffic as consumers move away from traditional search.

This creates a genuine paradox for digital teams:

- Block AI crawlers to control license costs, but risk becoming invisible in the emerging AI-powered discovery landscape

- Allow all AI crawlers to maximize visibility, but watch content request counts and costs spiral upward

- Attempt selective blocking to find a middle ground, but face the challenge that not all crawlers respect robots.txt directives

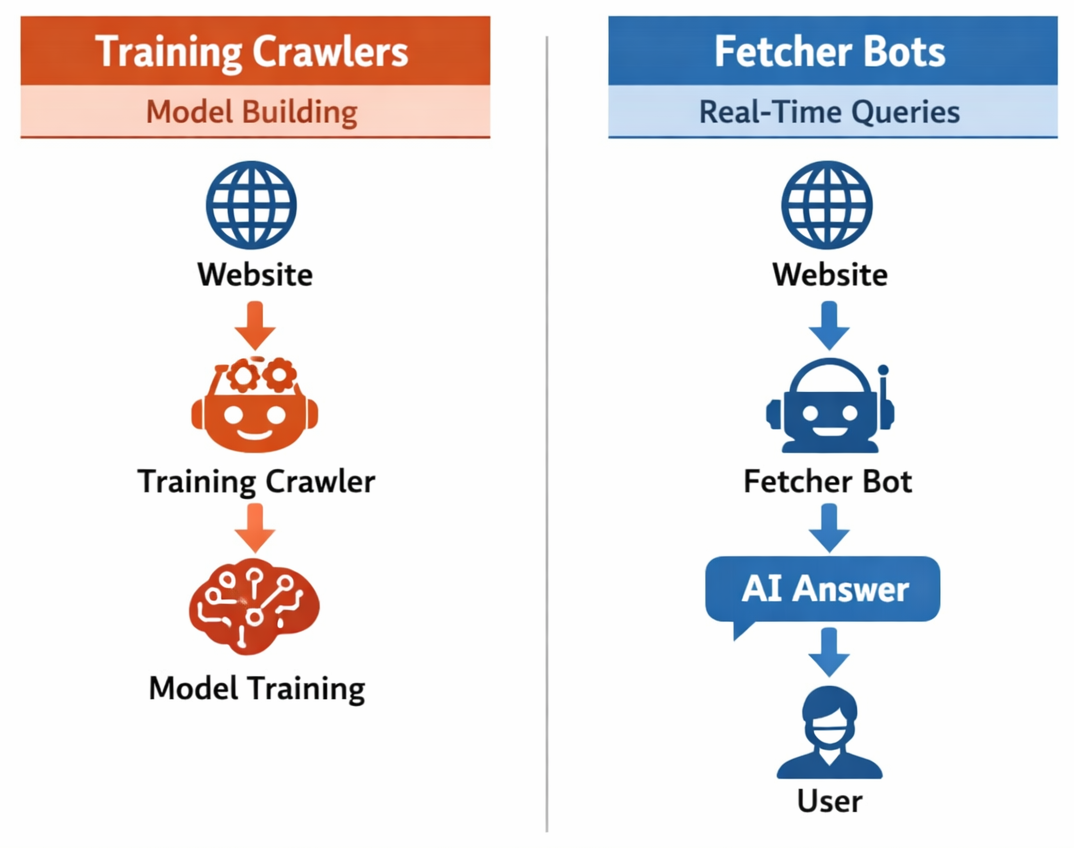

The reality is that AI bots serve different purposes. Training crawlers systematically scan websites to collect content for building language models. Fetcher bots, on the other hand, access content in real time based on user queries, essentially delivering your content to potential customers through AI interfaces. Blocking the wrong type could mean disappearing from the conversations your customers are having with AI assistants.
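Treating these categories differently starts with telling them apart. The following Python sketch classifies a User-Agent header by looking for known product tokens. The token-to-category table is a hypothetical starting point built from the crawlers named in this article's robots.txt example, not an authoritative list, and it assumes bots identify themselves honestly: a crawler that spoofs a browser user agent lands in the "other" bucket.

```python
# Hypothetical token table: crawler names from this article's robots.txt
# example, grouped the same way the article groups them.
BOT_CATEGORIES = {
    "gptbot": "training",
    "ccbot": "training",
    "chatgpt-user": "fetcher",
    "perplexitybot": "fetcher",
    "googlebot": "search",
    "bingbot": "search",
}


def classify_user_agent(user_agent: str) -> str:
    """Return "training", "fetcher", "search", or "other" for a User-Agent header."""
    ua = user_agent.lower()
    for token, category in BOT_CATEGORIES.items():
        if token in ua:  # case-insensitive substring match on the product token
            return category
    return "other"  # humans, unlisted bots, and bots that hide their identity


# Simplified example strings, not exact real-world User-Agent values.
for ua in [
    "Mozilla/5.0 (compatible; GPTBot/1.1)",
    "Mozilla/5.0 (compatible; ChatGPT-User/1.0)",
    "Mozilla/5.0 (compatible; Googlebot/2.1)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]:
    print(classify_user_agent(ua), "<-", ua)
```

Because matching is a plain substring test, table order would only matter if one token contained another; none of the tokens listed here overlap.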

Understanding what's actually hitting your AEM environment

Before implementing solutions, it's essential to understand the composition of your traffic. AEM as a Cloud Service provides several mechanisms for this analysis.

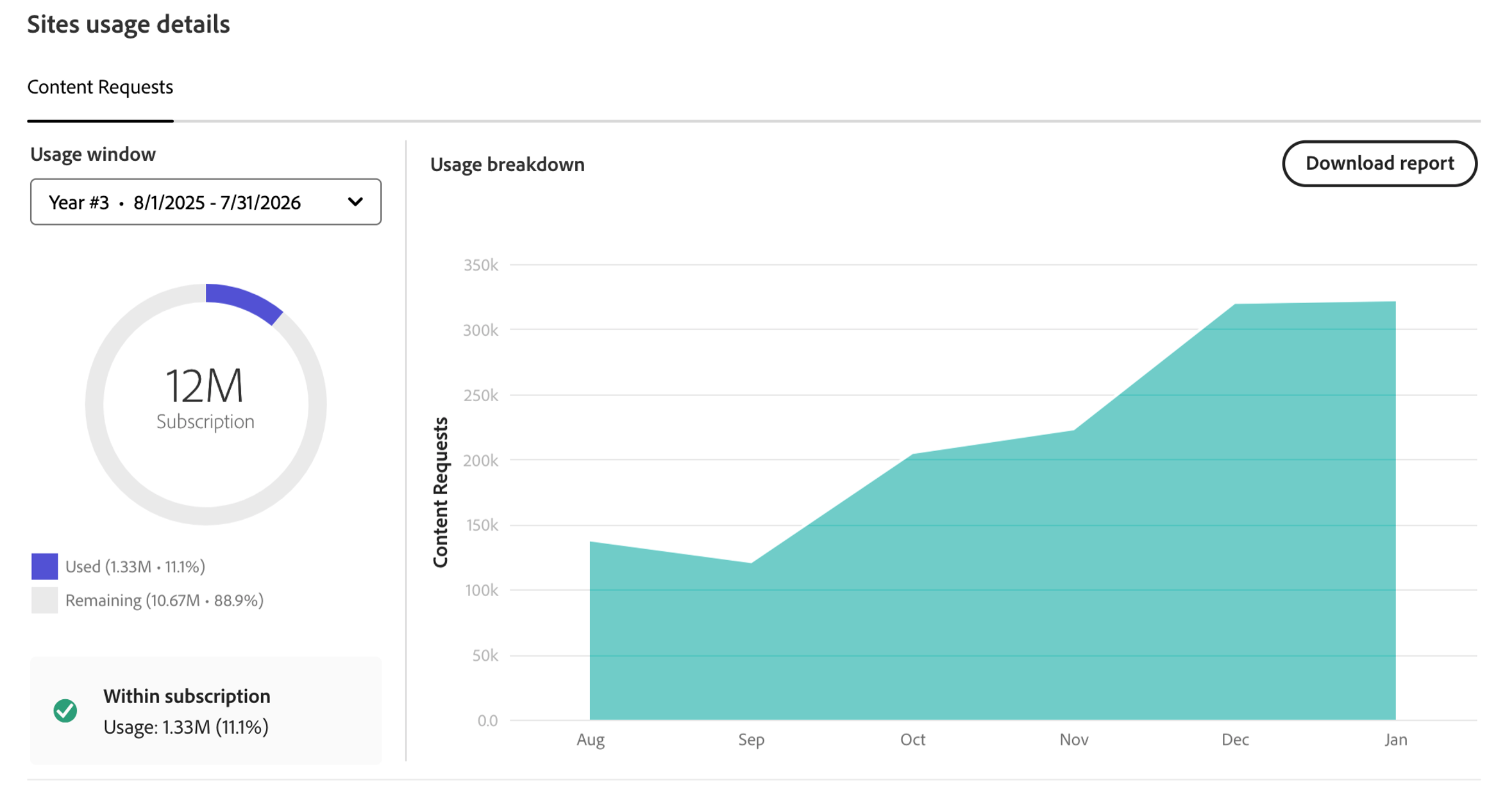

The License Dashboard in Cloud Manager provides a report on content request usage, though it currently offers overall traffic data rather than domain-specific breakdowns. For more detailed analysis, CDN logs can be accessed and analyzed using tools like Splunk or ELK.

What's already excluded from content requests

According to Adobe's documentation on Cloud Service content requests, well-known bots, URL monitors, and similar automated clients are already excluded from content request calculations. However, the category of "known bots" doesn't automatically include all AI crawlers. For example, while ClaudeBot is explicitly listed, many other AI training and retrieval bots from companies like OpenAI and Meta may still count toward your content requests, depending on how they identify themselves.

Update: since January 15, 2026, well-known AI/LLM crawlers have been added to the exclusion rules and are flagged as non-billable.

Gaining visibility into agentic traffic with Adobe LLM Optimizer

Launched in October 2025, Adobe LLM Optimizer addresses the visibility gap by helping organizations understand how AI systems interact with their content. Rather than flying blind, it provides real-time insight into agentic traffic: activity from AI crawlers, LLM-based assistants, and AI-powered browsers.

The tool offers several analytical capabilities:

- Brand Presence dashboards showing how often your brand is mentioned and cited in AI-generated responses

- Agentic Traffic dashboards revealing which pages LLMs visit, how frequently, and from which AI platforms

- Crawl-to-refer ratio analysis helping you understand which AI interactions actually drive value back to your site

- URL Performance Analysis showing hits, unique agents, success rates, and categories for individual pages

This visibility is crucial for making informed decisions about which traffic to allow and which to block. The distinction between crawlers that only take and those that also give (by referring users to your site) should inform your filtering strategy.
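LLM Optimizer reports the crawl-to-refer ratio for you. Its exact definition isn't described here, so the sketch below assumes a simple one: requests from a platform's crawlers divided by visits referred back from that same platform. The platform names and counts are made up for illustration.

```python
def crawl_to_refer_ratio(crawls: dict[str, int], referrals: dict[str, int]) -> dict[str, float]:
    """Crawler requests per referred visit, per AI platform.

    A platform whose crawlers fetch pages but never send a visitor back
    gets float("inf"): it only takes.
    """
    ratios = {}
    for platform, crawl_count in crawls.items():
        referred = referrals.get(platform, 0)
        ratios[platform] = crawl_count / referred if referred else float("inf")
    return ratios


# Made-up monthly numbers for three hypothetical platforms.
crawls = {"platform_a": 12_000, "platform_b": 3_000, "platform_c": 5_000}
referrals = {"platform_a": 40, "platform_b": 300}
print(crawl_to_refer_ratio(crawls, referrals))
# {'platform_a': 300.0, 'platform_b': 10.0, 'platform_c': inf}
```

A lower ratio means more visitors returned per crawled page, which makes that platform a stronger candidate for the "allow" side of your filtering strategy.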

A Splunk query that breaks down requests to your AEM platform by user agent can complement this analysis, showing which crawlers are active and how much each one contributes to your content request count.
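As a lightweight alternative to a Splunk query, here is a Python sketch that performs a similar aggregation over downloaded CDN logs. It assumes JSON-lines logs with the req_ua, status, and res_ctype fields found in Adobe's CDN log format; verify the field names against your own files. Like the content request rule, it only counts HTTP 200 responses with an HTML or JSON content type.

```python
import json
from collections import Counter

COUNTED_TYPES = {"text/html", "application/json"}


def count_user_agents(log_lines, top_n=10):
    """Return the top_n User-Agent strings by HTTP 200 HTML/JSON responses.

    Assumes one JSON object per line with req_ua, status and res_ctype fields.
    """
    counts = Counter()
    for raw in log_lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        entry = json.loads(raw)
        media_type = (entry.get("res_ctype") or "").split(";")[0].strip().lower()
        if entry.get("status") in (200, "200") and media_type in COUNTED_TYPES:
            counts[entry.get("req_ua") or "(none)"] += 1
    return counts.most_common(top_n)


sample_logs = [
    '{"req_ua": "GPTBot/1.1", "status": 200, "res_ctype": "text/html"}',
    '{"req_ua": "GPTBot/1.1", "status": 200, "res_ctype": "text/html;charset=utf-8"}',
    '{"req_ua": "Mozilla/5.0", "status": 200, "res_ctype": "application/json"}',
    '{"req_ua": "Mozilla/5.0", "status": 304, "res_ctype": "text/html"}',
    '{"req_ua": "Mozilla/5.0", "status": 200, "res_ctype": "image/png"}',
]
print(count_user_agents(sample_logs))  # [('GPTBot/1.1', 2), ('Mozilla/5.0', 1)]
```

Since the function only iterates over lines, an open log file object can be passed in place of the sample list.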

Solution 1: Traffic filter rules and WAF configuration

AEM as a Cloud Service includes traffic filter rules that can be configured through the CDN layer. These rules act as a protective shield to help secure your site from abuse while also providing mechanisms to manage unwanted traffic.

Standard traffic filter rules are available to all AEM Cloud Service customers and can be configured in the cdn.yaml file within your project's config folder:

```yaml
kind: "CDN"
version: "1"
metadata:
  envTypes: ["prod"]
data:
  trafficFilters:
    rules:
      - name: "block-blank-user-agent"
        when:
          allOf:
            - reqProperty: tier
              equals: "publish"
            - reqHeader: User-Agent
              equals: ""
        action:
          type: block
      - name: "rate-limit-aggressive-crawlers"
        when:
          allOf:
            - reqProperty: tier
              equals: "publish"
        action:
          type: log
        rateLimit:
          limit: 100
          window: 1
          penalty: 60
```

Note that the second rule uses the log action, so it only records clients that exceed the rate limit rather than blocking them. This lets you review the logs for false positives before switching the action type to block.

For organizations with the Extended Security license (formerly WAF-DDoS Protection), additional WAF traffic filter rules provide more sophisticated protection, using flags like SCANNER, NOUA, and USERAGENT to detect and block suspicious automated traffic patterns.

The key insight here is that traffic blocked at the CDN layer doesn't count toward your content requests. This makes WAF and traffic filter rules not just a security measure, but a cost optimization tool.
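Because blocked requests never reach the point where they are counted, a natural next step is a rule that blocks the training crawlers you have decided to exclude. The Python sketch below renders such a rule as YAML text that can be pasted under rules: in the cdn.yaml shown above. It assumes the traffic filter syntax supports a reqHeader condition combined with a regular-expression matches operator, as described in Adobe's traffic filter rules documentation; check the current docs before deploying, and keep in mind that a User-Agent rule only catches crawlers that identify themselves honestly. The rule name is hypothetical.

```python
import re

# Restrict names and tokens to letters, digits and hyphens so they need no
# escaping in either the regular expression or the double-quoted YAML string.
SAFE_RE = re.compile(r"[A-Za-z0-9-]+")


def block_user_agents_rule(name: str, tokens: list[str]) -> str:
    """Render a traffic filter rule (as YAML text) that blocks publish-tier
    requests whose User-Agent header contains any of the given tokens.

    Indentation matches a list item under data.trafficFilters.rules.
    """
    if not tokens:
        raise ValueError("at least one token is required")
    for value in [name, *tokens]:
        if not SAFE_RE.fullmatch(value):
            raise ValueError(f"unsupported characters in: {value!r}")
    pattern = ".*(" + "|".join(tokens) + ").*"
    lines = [
        f'      - name: "{name}"',
        "        when:",
        "          allOf:",
        "            - reqProperty: tier",
        '              equals: "publish"',
        "            - reqHeader: User-Agent",
        f'              matches: "{pattern}"',
        "        action:",
        "          type: block",
    ]
    return "\n".join(lines)


print(block_user_agents_rule("block-ai-training-crawlers", ["GPTBot", "CCBot"]))
```

Generating the rule from a list keeps the blocked crawlers in one place, so the same list can drive both robots.txt and the CDN rule as your policy evolves.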

Solution 2: BYOCDN for enhanced control

For organizations requiring more granular control over their CDN behavior, AEM as a Cloud Service supports Bring Your Own CDN (BYOCDN) configurations. While Adobe bundles Fastly CDN with AEMaaCS, customers can place their own CDN layer, such as Cloudflare, Akamai, or AWS CloudFront, in front of the Adobe infrastructure.

BYOCDN configurations offer several advantages for managing AI traffic:

Cloudflare, for example, provides a one-click option to block all AI scrapers and crawlers, along with AI Crawl Control features that offer more nuanced management. Its machine learning models can identify bots even when they spoof user agents, addressing the reality that many AI crawlers don't honestly identify themselves.

Akamai and Cloudflare both offer advanced bot management capabilities, including:

- Real-time bot detection and classification

- Rate limiting with sophisticated patterns

- Edge computing capabilities for custom logic

- Detailed bot traffic analytics

The key consideration with BYOCDN is that content request volume must still be reported annually to Adobe as stated in your contract. However, having your own CDN provides better tools to monitor, analyze, and control this traffic before it impacts your license allocation.

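When configuring BYOCDN, make sure your CDN forwards the required headers, including X-AEM-Edge-Key, which lets Adobe validate that requests come through your CDN, and X-Forwarded-Host, which tells AEM which domain was requested so it can route correctly.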
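The header handling can be sketched as follows, in Python for readability; in a real deployment this logic lives in your CDN's own configuration or edge code. The sketch covers only the two headers named above and assumes the edge key is a secret shared between your CDN and Adobe's. As a design choice, it drops any client-supplied copies of either header so they cannot be spoofed from outside. Other origin settings, such as which host name the request is sent to, follow Adobe's BYOCDN documentation and are out of scope here.

```python
# Header names the customer CDN sets itself; client-supplied copies are dropped.
MANAGED_HEADERS = {"x-aem-edge-key", "x-forwarded-host"}


def origin_request_headers(client_headers: dict[str, str], edge_key: str) -> dict[str, str]:
    """Return the headers to send from a customer CDN to Adobe's CDN (sketch).

    - X-Forwarded-Host carries the domain the visitor actually requested.
    - X-AEM-Edge-Key carries the shared secret used to validate that the
      request came through your CDN.
    """
    host = next((v for k, v in client_headers.items() if k.lower() == "host"), None)
    if host is None:
        raise ValueError("incoming request has no Host header")
    headers = {k: v for k, v in client_headers.items() if k.lower() not in MANAGED_HEADERS}
    headers["X-Forwarded-Host"] = host
    headers["X-AEM-Edge-Key"] = edge_key
    return headers


incoming = {"Host": "www.example.com", "User-Agent": "GPTBot/1.1", "X-AEM-Edge-Key": "spoofed"}
print(origin_request_headers(incoming, edge_key="replace-with-your-secret"))
```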

Solution 3: Strategic robots.txt configuration

While robots.txt is often the first recommendation for managing AI crawlers, it requires a strategic approach rather than blanket blocking. The file expresses your preferences to compliant bots, but as many have observed, not all AI crawlers respect these directives.

A balanced approach might look like:

```text
# Allow search engine crawlers for SEO
User-agent: Googlebot
Allow: /

User-agent: Bingbot
Allow: /

# Block AI training crawlers that don't refer traffic back
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

# Allow AI fetcher bots for visibility in AI search
User-agent: ChatGPT-User
Allow: /

User-agent: PerplexityBot
Allow: /
```

The distinction between training crawlers (like GPTBot and CCBot) and fetcher bots (like ChatGPT-User) is crucial. Training crawlers collect data for model development and typically don't drive any traffic back to your site. Fetcher bots, conversely, access content in real time to answer user queries and represent the new discovery channel that can drive engaged visitors.
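Before deploying robots.txt changes, you can check how a standards-following parser reads them. Python's standard library ships urllib.robotparser; this sketch feeds it the example above and asks which bots may fetch a page. The URL and the SomeOtherBot name are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt example from this section, as a string.
ROBOTS_TXT = """\
# Allow search engine crawlers for SEO
User-agent: Googlebot
Allow: /

User-agent: Bingbot
Allow: /

# Block AI training crawlers that don't refer traffic back
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

# Allow AI fetcher bots for visibility in AI search
User-agent: ChatGPT-User
Allow: /

User-agent: PerplexityBot
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes lines; no network access needed

url = "https://www.example.com/content/products.html"  # placeholder URL
agents = ["Googlebot", "GPTBot", "CCBot", "ChatGPT-User", "PerplexityBot", "SomeOtherBot"]
results = {agent: parser.can_fetch(agent, url) for agent in agents}

for agent, allowed in results.items():
    print(f"{agent:<14} {'allowed' if allowed else 'blocked'}")
```

Two details stand out. A bot not named in the file, such as SomeOtherBot, is allowed by default because there is no catch-all "User-agent: *" group. And can_fetch matches on the product token before the first slash, so a full browser-style string such as "Mozilla/5.0 (compatible; GPTBot/1.1)" would not match the GPTBot group: like robots.txt itself, the check depends on bots identifying themselves cleanly.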

However, robots.txt alone is insufficient because:

- Compliance is voluntary, and some bots ignore it entirely

- Bot operators can easily change user agent strings

- Some AI systems use previously indexed data rather than real-time fetching

This is why robots.txt should be part of a layered strategy rather than your sole defense.

Putting it all together: a layered strategy

Managing AI traffic in AEM as a Cloud Service requires a multi-pronged approach:

Layer 1: Monitoring and visibility

- Enable CDN log analysis using tools like Splunk or ELK

- Use the License Dashboard in Cloud Manager to track content request trends

- Implement Adobe LLM Optimizer to understand agentic traffic patterns and their business value

Layer 2: Traffic filtering

- Configure standard traffic filter rules to block suspicious patterns

- Implement rate limiting to prevent aggressive crawling behavior

- Consider the Extended Security license for WAF capabilities if AI traffic is significantly impacting costs

Layer 3: Strategic access control

- Configure robots.txt to block training crawlers while allowing fetcher bots

- Consider BYOCDN for advanced bot management if native capabilities are insufficient

- Use LLM Optimizer's Optimize at Edge to serve AI-friendly content without CMS changes

Layer 4: Ongoing optimization

- Monitor the effectiveness of your rules through CDN logs

- Track brand presence in AI-generated responses through LLM Optimizer

- Adjust strategies as the AI landscape evolves

The bigger picture

The rise of AI crawler traffic represents a fundamental shift in how the web operates. As we noted previously, we're witnessing the emergence of a "Machine Web" in which content will increasingly be consumed by AI bots rather than humans directly.

For AEM as a Cloud Service customers, this shift brings both challenges and opportunities. The cost implications are real and require active management. But so too is the opportunity to establish brand presence in the new AI-powered discovery landscape.

The organizations that will thrive are those that find the right balance: controlling costs by filtering wasteful traffic while strategically enabling the AI interactions that drive genuine business value. With tools like traffic filter rules, Adobe LLM Optimizer, and BYOCDN options, AEM as a Cloud Service provides the building blocks for this balanced approach.

The key is to stop thinking about AI traffic as a single category and start treating it as a spectrum. From aggressive training crawlers that offer no value to real-time fetcher bots that represent your new customer acquisition channel, each type of AI traffic deserves its own strategy.

Are you experiencing unexpected content request growth in your AEM Cloud Service environment? We help organizations audit their traffic patterns, implement effective filtering strategies, and optimize their AI visibility.